THE IMPORTANCE OF DATA QUALITY CONTROL

July 22, 2025

The old saying is well known: “garbage in, garbage out”.

If we want to obtain the best results from our data, we need to verify that the data is fit to be transformed, interpreted, and used as input for calculations and modeling.

Data quality is not optional; it is MANDATORY! We should not simply assume that the data we receive is ready to be used as input for our interpretation. We need to review it beforehand to check that the values are consistent and physically possible.

This step applies to all our data: geological maps, drilling reports, previous interpretations, well logs, seismic gathers, and stacked seismic volumes.

This stage is performed by the whole team: geologists, petrophysicists, and geophysicists.

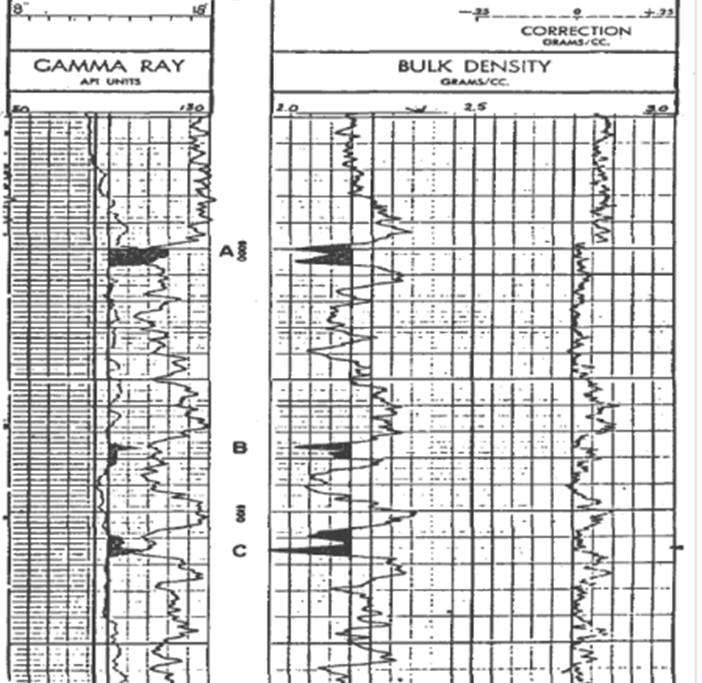

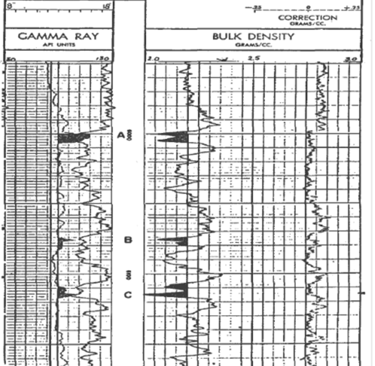

Looking more closely at geophysics, the key data to review are the main logs used in seismic interpretation, starting with the sonic and density logs. To build a reliable velocity curve for each well, the recorded logs must be merged (they are often acquired in multiple runs, with strong spikes where one logging run ends and the next begins) in order to unify criteria and obtain a drift with sensible velocity values. Hole stability must also be considered, because in unconsolidated formations washouts (caverns) can form and lead to anomalous readings. In that case it is useful to establish a velocity trend. Density logs are especially sensitive to washouts, because the density tool is a contact tool: it must be pressed against the borehole wall to measure correctly. This step is performed mainly by the petrophysicists, who deliver the valid (or official) logs to use.

Impact of borehole rugosity on the density log. Image from https://oilproduction.net/files/Well%20Logs%20Quality%20Control%20Issues.pdf (Well Logs Quality Control Issues, Zaki Bassiouni).
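The log editing described above can be illustrated with a minimal Python sketch. It is not the petrophysicists' actual workflow: it assumes a caliper log and the bit size are available, and the function names, the window length, the 20 µs/ft threshold, the 1-inch tolerance, and the sample values are all hypothetical. It replaces isolated sonic spikes with a running median and flags enlarged-hole intervals where density readings deserve suspicion.

```python
from statistics import median


def despike(values, window=5, threshold=20.0):
    """Replace samples that differ from the running median by more than
    `threshold` (same units as the log) with that median."""
    half = window // 2
    cleaned = list(values)
    for i, value in enumerate(values):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        local_median = median(values[lo:hi])
        if abs(value - local_median) > threshold:
            cleaned[i] = local_median
    return cleaned


def flag_washouts(caliper, bit_size, tolerance=1.0):
    """Return True where the hole diameter exceeds the bit size by more
    than `tolerance` (same units as the caliper)."""
    return [c - bit_size > tolerance for c in caliper]


# Made-up values for illustration only.
dt = [90.0, 91.0, 89.5, 160.0, 90.5, 91.5, 90.0]   # sonic slowness, us/ft
caliper = [8.6, 8.7, 8.6, 8.8, 11.2, 11.5, 8.7]    # hole diameter, inches

dt_clean = despike(dt)                          # the 160 us/ft spike is replaced
bad_hole = flag_washouts(caliper, bit_size=8.5)  # True at the two enlarged samples
```

A fixed threshold is the simplest possible choice; in practice the limits would be set per formation, and flagged intervals would be reviewed rather than replaced automatically.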

It is also important to verify the previous geological reports. Legacy wells are sometimes very old and use outdated nomenclature. The geologists unify the criteria for interpreting the tops in order to validate or revise the previous interpretation. They also define the schematic geological model, which needs the structure provided by the geophysicists.

The geophysical interpretation relies on the well logs to create the synthetic seismograms and perform the well tie. For this reason, the “official” logs provided by the petrophysicists are used as input. Unconditioned well logs can lead to wrong time/depth curves and to synthetic events that cannot be correlated with the actual seismic data.

Regarding seismic data, we can divide it into prestack and poststack.
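To see why an unedited sonic log distorts the time/depth curve, consider the following minimal sketch. It assumes slowness in µs/m, a vertical well, and a known time at the first sample; the function names, the velocity limits, and the sample values are hypothetical. It integrates slowness over depth to obtain two-way time and compares a clean log with one that still contains a spike.

```python
def two_way_time(depths_m, dt_us_per_m, t0_s=0.0):
    """Integrate sonic slowness over depth (trapezoidal rule) and return
    the two-way time in seconds at each depth sample."""
    twt = [t0_s]
    for i in range(1, len(depths_m)):
        dz = depths_m[i] - depths_m[i - 1]
        mean_slowness = 0.5 * (dt_us_per_m[i] + dt_us_per_m[i - 1]) * 1e-6  # s/m
        twt.append(twt[-1] + 2.0 * dz * mean_slowness)
    return twt


def implausible_velocity(dt_us_per_m, v_min=1400.0, v_max=7000.0):
    """Return True where the velocity implied by the slowness falls outside
    an assumed plausible range (m/s)."""
    return [not (v_min <= 1e6 / dt <= v_max) for dt in dt_us_per_m]


# Made-up values; the coarse 10 m sampling just keeps the arithmetic visible.
depths = [1000.0, 1010.0, 1020.0, 1030.0, 1040.0]
dt_clean = [400.0, 400.0, 400.0, 400.0, 400.0]   # 2500 m/s everywhere
dt_raw = [400.0, 400.0, 900.0, 400.0, 400.0]     # one unedited spike

twt_clean = two_way_time(depths, dt_clean)
twt_raw = two_way_time(depths, dt_raw)
error_s = twt_raw[-1] - twt_clean[-1]   # roughly 10 ms of extra two-way time
suspect = implausible_velocity(dt_raw)  # True only at the spike
```

Every sample below the spike inherits the time error, which is why the synthetic events end up shifted relative to the seismic data.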

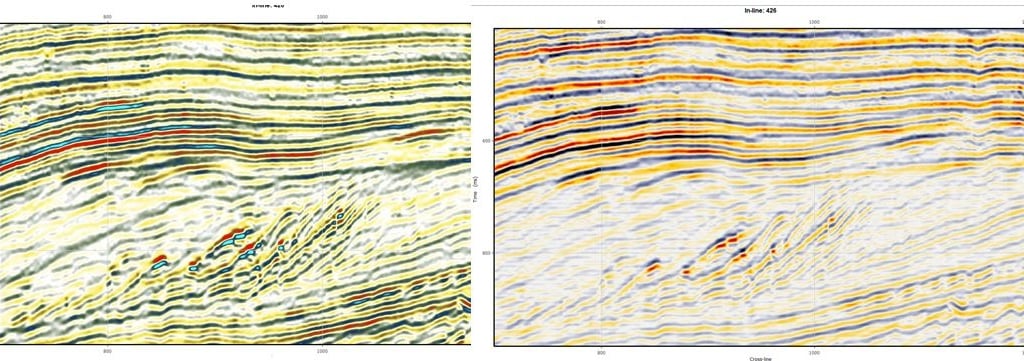

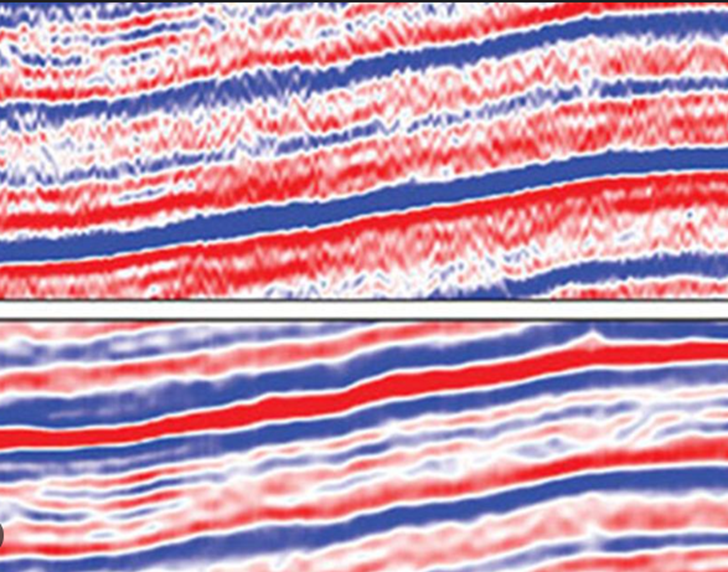

For poststack data, the main idea is to improve the image quality and generate “smoothed” surfaces, taking the seismic resolution into account. Generally speaking, surfaces obtained from horizon picking have many irregularities, which sometimes lead to misinterpretation of structures. Additionally, it is hard to propagate properties along such irregular surfaces when we try to build a velocity model or an impedance volume. When we work with stacked volumes, it is ideal to obtain a smoothed version of the legacy data. This smoothing is performed through structural filtering, although sometimes frequency filtering is also necessary (WARNING: we must be very careful when working with the frequencies so as not to remove genuine signal). Smoothing lets us generate cleaner horizons and faults, which will be input for the later stages of reservoir characterization. The smoothed volumes, rather than the original legacy volumes, must be used as input for attribute calculation (for example amplitude, ant-tracking, similarity, instantaneous phase, and instantaneous frequency). Smoothing could be a standard step in our workflow.

Difference between a legacy and a smoothed seismic section. Seismic attributes computed from the legacy data can contain artifacts and distort the similarity volumes (with a heavy impact when fault volumes are obtained with Machine Learning tools). Image from https://explorer.aapg.org/story/articleid/12428/causes-and-appearance-of-noise-in-seismic-data-volumes
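The idea behind the smoothing can be sketched with a lateral median filter on a tiny 2D section. This is a deliberate simplification, not a full structural filter: it filters along constant-time samples, which matches structure-oriented filtering only where events are flat, whereas a real implementation follows the local dip. The function name, the filter width, and the amplitudes are hypothetical.

```python
from statistics import median


def lateral_median(section, half_width=1):
    """Median-filter a 2D section across neighbouring traces, sample by
    sample. `section[i][k]` is the amplitude of trace i at time sample k."""
    n_traces = len(section)
    n_samples = len(section[0])
    smoothed = []
    for i in range(n_traces):
        lo = max(0, i - half_width)
        hi = min(n_traces, i + half_width + 1)
        smoothed.append(
            [median(section[j][k] for j in range(lo, hi)) for k in range(n_samples)]
        )
    return smoothed


# Made-up section: a flat event at sample 1, with a noise burst on trace 2.
section = [
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.9, -0.8, 0.7],
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
]

smoothed = lateral_median(section)  # the burst is removed, the flat event is kept
```

A median is used here rather than a mean because it rejects isolated bursts without averaging them into neighbouring traces. On dipping events this flat filter would smear the reflector, which is exactly why dip-steered filtering is preferred on real volumes.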

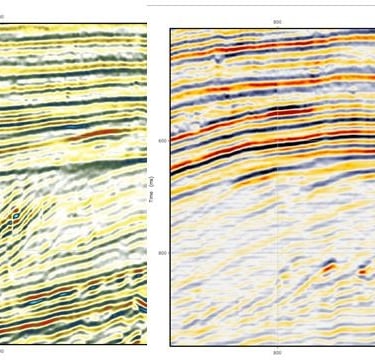

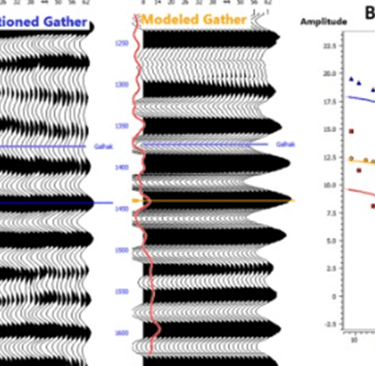

For prestack data, and especially when we want to perform a seismic characterization, it is necessary to take into account that the seismic processing of a volume can have multiple targets and that, to obtain the best quality/price ratio, the gathers may be left misaligned at certain seismic events. When we want to evaluate AVO/AVA attributes or run a prestack inversion, the gathers must be corrected and conditioned. The conditioning can involve steps such as trim statics and sometimes a Radon transform.

Differences between a raw gather and a conditioned gather, and their impact on the results. Image from Quer et al., Porosity estimation and reservoir favorite zone extraction through pre-stack seismic AVO inversion: A case study of the galhak-oil formation in the Rawat Basin, Sudan (https://www.sciencedirect.com/science/article/abs/pii/S0926985125001351)
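Trim statics, one of the conditioning steps mentioned above, can be sketched as follows. This is a minimal single-pass version under simplifying assumptions: whole-sample shifts only, one shift per trace, and the plain stack of the gather as the pilot trace. Production implementations typically work in time windows, iterate, and allow sub-sample shifts. All function names and values are hypothetical.

```python
def cross_correlation(pilot, trace, lag):
    """Sum of pilot[i] * trace[i + lag] over the overlapping samples."""
    total = 0.0
    for i in range(len(pilot)):
        j = i + lag
        if 0 <= j < len(trace):
            total += pilot[i] * trace[j]
    return total


def trim_static(trace, pilot, max_shift=3):
    """Lag in samples, within +/- max_shift, that best aligns trace with pilot."""
    lags = range(-max_shift, max_shift + 1)
    return max(lags, key=lambda lag: cross_correlation(pilot, trace, lag))


def apply_shift(trace, lag):
    """Move the sample at index i + lag to index i, padding with zeros."""
    n = len(trace)
    return [trace[i + lag] if 0 <= i + lag < n else 0.0 for i in range(n)]


def condition_gather(gather, max_shift=3):
    """Align every trace of a gather to the stacked (mean) pilot trace."""
    n_samples = len(gather[0])
    pilot = [sum(tr[k] for tr in gather) / len(gather) for k in range(n_samples)]
    lags = [trim_static(tr, pilot, max_shift) for tr in gather]
    return [apply_shift(tr, lag) for tr, lag in zip(gather, lags)], lags


# Made-up gather: the third trace arrives one sample late.
gather = [
    [0.0, 0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0, 0.0],
]

conditioned, lags = condition_gather(gather)  # lags is [0, 0, 1]
```

Limiting `max_shift` matters: a large search range lets the correlation lock onto a neighbouring event (cycle skipping) and can destroy the true amplitude variation with offset that AVO analysis depends on.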

Once our data has been reviewed and conditioned by the team, we can be confident in the results. Data QC must not be omitted from the workflow.

At QeenkenGeo we can help your company perform QC to improve your results. Don't hesitate to contact us.

Hashtags: #QCdata, #DataConditioning, #WelllogsEdit